In this section, we present the motivation for alignment as a three-step argument:

- Deep learning-based systems (or applications) increasingly impact society and bring significant risks.

- Misalignment represents a significant source of risks.

- Alignment research and practice address risks stemming from misaligned systems.

The Prospect and Impact of AGI

Over the past decade, deep learning has advanced substantially, producing large-scale neural networks with remarkable capabilities across many domains. These systems have achieved notable results in settings ranging from game environments to more intricate, even high-stakes, real-world scenarios. LLMs in particular have shown improved multi-step reasoning and cross-task generalization, abilities that strengthen with more training time, more training data, and larger parameter counts.

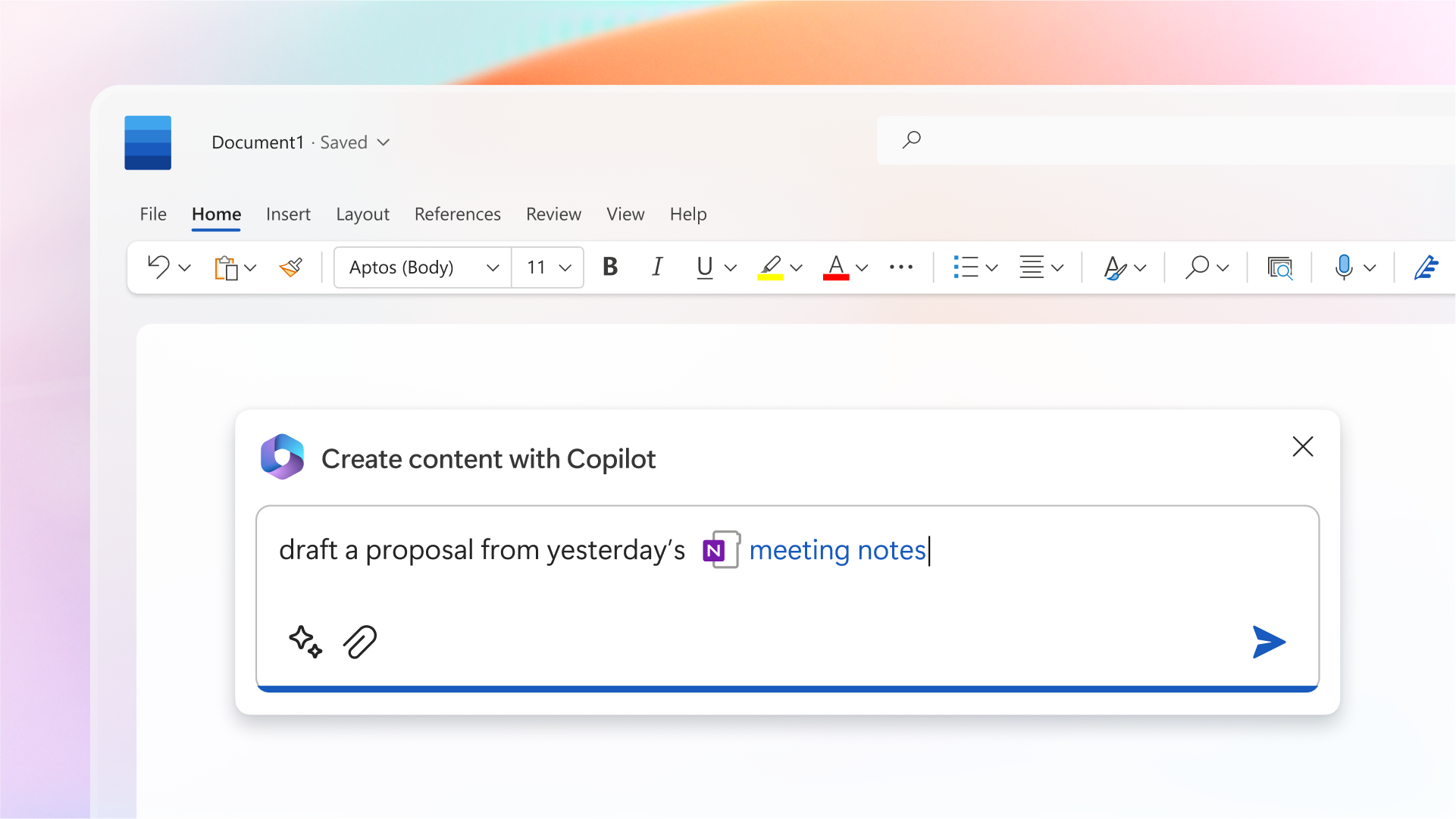

Introducing Microsoft 365 Copilot – your copilot for work

With improved capabilities come increased risks. Some undesired behaviors of LLMs (such as untruthful answers, sycophancy, and sandbagging) worsen as model scale increases, raising worries that advanced AI systems will be hard to control. In addition, emerging trends such as LLM-based agents raise concerns about these systems’ controllability and ethicality.

Fine-tuning Aligned Language Models Compromises Safety, Even When Users Do Not Intend To! (Qi et al., 2023)

Among the large-scale risks from superhuman capabilities specifically, it has been proposed that global catastrophic risks (i.e., risks of severe harm on a worldwide scale) and existential risks (i.e., risks that threaten the destruction of humanity’s long-term potential) from advanced AI systems are especially salient, a view supported by first-principles deductive arguments, evolutionary analysis, and concrete scenario mapping. In the Center for AI Safety’s Statement on AI Risk, leading AI scientists and other notable figures stated that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” The median respondent in a survey of NeurIPS and ICML authors estimated a 5% chance that the long-run effect of advanced AI on humanity would be extremely bad (e.g., human extinction), and 36% of surveyed NLP researchers reported believing that AI could produce catastrophic outcomes in this century, on the level of all-out nuclear war. Beyond extinction, existential risks from AI also include threats such as lock-in and stagnation.

The Source of AI Risks: Misalignment

Reliably aligning AI systems with human intentions remains an open challenge. Cutting-edge AI systems have exhibited multiple undesirable or harmful behaviors, and similar worries have been raised about more advanced systems. These problems, known as misalignment of AI systems, can arise naturally, even without misuse by malicious actors, and represent a significant source of risks from AI, including safety hazards and potential existential risks. These large-scale risks are significant because each of the following has a non-trivial likelihood:

- Superintelligent AI systems are built.

- Those AI systems pursue large-scale goals.

- Those goals are misaligned with human intentions and values.

- This misalignment leads to humans losing control of humanity’s future trajectory.

OpenAI Superalignment Research.

Addressing the risks posed by misalignment requires alignment techniques that ensure an AI system’s objectives accord with human intentions and values, thereby averting unintended and unfavorable outcomes. More importantly, we expect these techniques to scale to more demanding tasks and to significantly more advanced AI systems, including ones smarter than humans.

The Objective of Alignment: RICE

There is no universally accepted definition of alignment, so before going further we must clarify what we mean by alignment goals. We characterize the alignment objectives with four principles: Robustness, Interpretability, Controllability, and Ethicality (RICE). The RICE principles define four key characteristics that an aligned system should possess:

Summarized by Alignment Survey Team. For more details, please refer to our paper.

- Robustness states that the system’s stability needs to be guaranteed across various environments;

- Interpretability states that the operation and decision-making process of the system should be clear and understandable;

- Controllability states that the system should be under the guidance and control of humans;

- Ethicality states that the system should adhere to society’s moral norms and values.

These four principles guide the alignment of an AI system with human intentions and values. They are not end goals in themselves but intermediate objectives in service of alignment.